Jürgen is a Technology Strategist at Dynatrace. He is a core contributor to the Keptn open-source project and is responsible for the strategy and integration of self-healing techniques and tools into the Keptn framework. Getting things done by automating them drives his daily work. He is passionate about developing new software and also loves to share his experience, most recently at conferences on Kubernetes-based technologies and automation.

Putting Chaos into Continuous Delivery - how to increase the resilience of your applications

About the speaker

Jürgen Etzlstorfer

Technology Strategist, Dynatrace

About the talk

We will discuss how you can construct continuous delivery pipelines that include chaos experimentation while simulating real-world load to test the resilience of applications. On top of that, we implement quality gates based on SLOs to ensure resilient applications are deployed into production.

Continuous Delivery practices have evolved significantly with the cloud-native paradigm. GitOps and Chaos Engineering are at the forefront of this new CD approach, with an increasingly common pattern of Git-backed pipeline definitions that implement 'chaos stages' in pre-prod environments to gauge SLO compliance. In this talk, we will discuss how you can construct pipelines that include chaos experimentation (mapped to declarative hypotheses around application steady state) while simulating real-world load, and implement quality gates to ensure resilient applications are deployed into production. All of this is done in a GitOps-native manner. We will also demonstrate how you can add chaos tests to your existing CD pipelines without rewriting them.

Transcript

Putting Chaos into Continuous Delivery

For yet another insightful session on day 2 of Chaos Carnival 2021, we had with us Jürgen Etzlstorfer, Technology Strategist from Dynatrace, to speak about "Putting Chaos into Continuous Delivery".

Etzlstorfer starts by explaining, "Applications fail, and fall short of resilience, because of errors, outages of third-party components, infrastructure outages, and so on. Testing in production is really expensive and risky, given the number of processes involved and the impact on our end-user experience. So it is highly recommended to shift chaos testing left, towards the early stages where we have our CI/CD pipelines. Those stages can be almost like production, for example a hardening phase, a staging environment, or even a dev environment."

EVALUATE AND IMPROVE RESILIENCY:

- Performance/load tests are not enough. Chaos engineering is needed to verify how long the application can survive if there is latency in the network or the CPU is saturated.
- Establish a process of continuous execution of chaos.
- Establish means to measure and evaluate resilience.
- Use service-level objectives (SLOs) to define the quality criteria, that is, the important goals of your microservices or applications.
- Improve based on the results by studying and analyzing them.

CHAOS IN CD: THE CLOUD NATIVE WAY

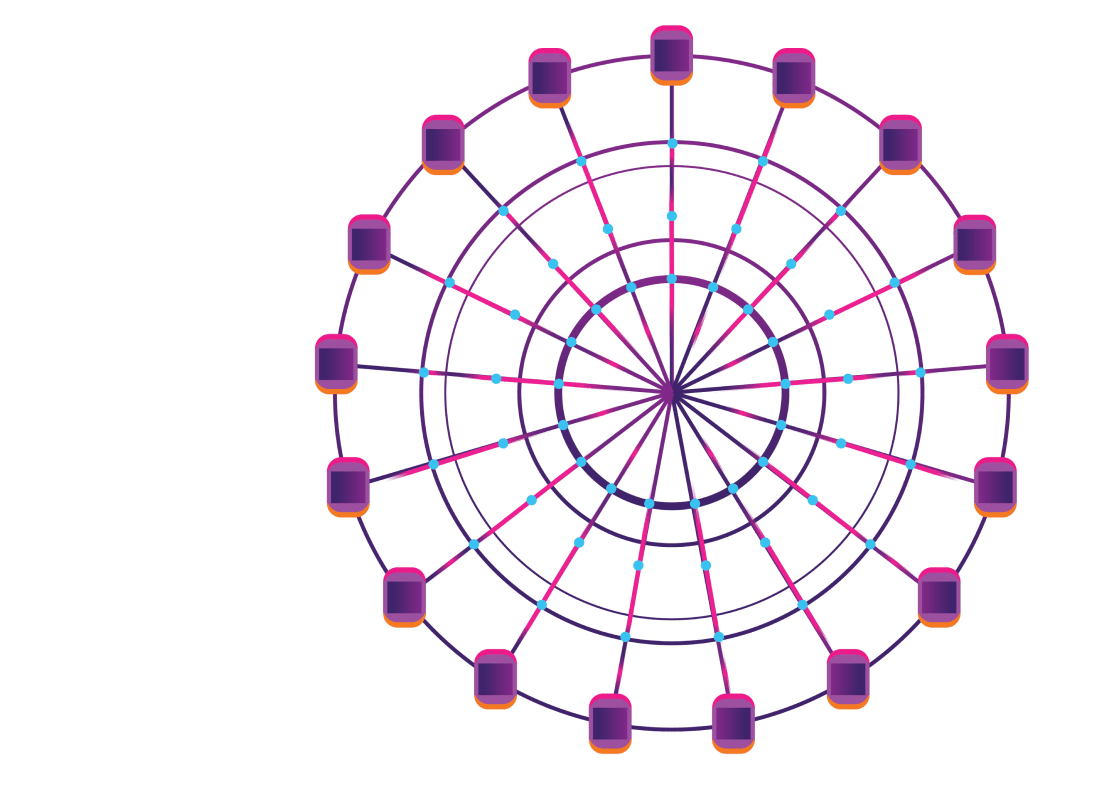

"We need a chaos engineering tool that does the chaos testing for us. An orchestrator or a CD tool then triggers the chaos experiments. After that, we need an evaluator that assesses the impact of our chaos experiments on the applications. All these components should be declarative, scalable, and based on GitOps."

Etzlstorfer defines Chaos Engineering as "the discipline of experimenting on a software system in production, to build confidence in a system's capability to withstand turbulent and unexpected conditions." He adds, "There are many chaos testing tools such as Chaos Monkey, Simian Army, Gremlin, Chaos Mesh, LitmusChaos, etc."

LITMUSCHAOS: CLOUD-NATIVE WAY TO CHECK RESILIENCY

The whole process starts by identifying the steady-state conditions of the system. A fault is then introduced. If the system regains its steady-state conditions, it is resilient; if it fails to regain them, a weakness in the system has been found.
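The loop described above can be sketched in a few lines of Python. This is only an illustration of the steady-state idea, not how Litmus implements it: `ToyService`, `run_experiment`, and the returned verdict strings are hypothetical names invented for this example, and the "fault" is simply removing one replica from an in-memory counter.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyService:
    """Hypothetical stand-in for a deployed application."""
    desired: int          # replicas we want running
    running: int          # replicas currently running
    self_healing: bool    # whether something restores lost replicas

    def is_steady(self) -> bool:
        # Steady-state hypothesis: all desired replicas are running.
        return self.running >= self.desired

    def kill_one_replica(self) -> None:
        # The injected fault: one replica disappears.
        self.running -= 1
        if self.self_healing:
            # Simulates a controller rescheduling the lost replica.
            self.running = self.desired


def run_experiment(is_steady: Callable[[], bool],
                   inject_fault: Callable[[], None]) -> str:
    # 1. Verify the steady state first; otherwise the result is meaningless.
    if not is_steady():
        return "aborted"
    # 2. Introduce the fault.
    inject_fault()
    # 3. Check whether the steady state was regained.
    return "resilient" if is_steady() else "weakness-found"


if __name__ == "__main__":
    healing = ToyService(desired=3, running=3, self_healing=True)
    fragile = ToyService(desired=3, running=3, self_healing=False)
    print(run_experiment(healing.is_steady, healing.kill_one_replica))
    print(run_experiment(fragile.is_steady, fragile.kill_one_replica))
```

In a real setup the steady-state check would query monitoring data and the fault would be injected by the chaos tool; the structure of the loop stays the same.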

The process in Litmus is built in a very cloud-native way. A chaos operator running in the Kubernetes cluster picks up custom resources that describe the experiment. It then kicks off the chaos runner, which is responsible for executing the chaos experiment. After the experiment completes, the Litmus ChaosResult custom resource (CR) is available for further study and analysis.

KEPTN: DATA-DRIVEN ORCHESTRATION FOR CD AND OPERATIONS

Cloud-native application lifecycle orchestration, automating:

- Observability, dashboards, and alerting
- SLO-driven multistage delivery
- Operations and remediation

Keptn is declarative, extensible, and based on GitOps.

The workflow definition (the shipyard) is a declarative description of environments. It defines the sequences and tasks and keeps each sequence or task loosely coupled from the tooling that executes it. This stage is called the staging phase of the chaos test.

Jürgen adds, "Evaluation based on Service Level Indicators (SLIs) and Service Level Objectives (SLOs) is implemented in Keptn. SLIs are defined per SLI provider as YAML, for example as a Prometheus metrics query. SLOs are defined per Keptn service as YAML and contain a list of objectives with fixed or relative pass and warning criteria."
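To make the pass and warning criteria more concrete, here is a minimal Python sketch of an SLO-based scoring step. It is a simplified model and not Keptn's implementation: the `Objective` class, the `evaluate` function, the SLI names, the "lower is better" assumption, the half credit for a warning, and the 90% / 75% score thresholds are all assumptions chosen for this example. It also covers only fixed criteria, not relative ones.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Objective:
    sli: str             # name of the indicator, e.g. a Prometheus query result
    pass_max: float      # value <= pass_max earns full points
    warning_max: float   # value <= warning_max earns half points
    weight: int = 1


def evaluate(objectives: List[Objective],
             sli_values: Dict[str, float],
             pass_pct: float = 90.0,
             warning_pct: float = 75.0) -> Tuple[float, str]:
    """Return (score in percent, overall result)."""
    total = sum(o.weight for o in objectives)
    earned = 0.0
    for o in objectives:
        value = sli_values[o.sli]
        if value <= o.pass_max:
            earned += o.weight
        elif value <= o.warning_max:
            earned += o.weight / 2   # assumption: a warning counts half
    score = 100.0 * earned / total
    if score >= pass_pct:
        return score, "pass"
    if score >= warning_pct:
        return score, "warning"
    return score, "fail"


if __name__ == "__main__":
    slo = [
        Objective("response_time_p95_ms", pass_max=500, warning_max=800),
        Objective("error_rate_pct", pass_max=1, warning_max=5),
    ]
    print(evaluate(slo, {"response_time_p95_ms": 420, "error_rate_pct": 0.5}))
    print(evaluate(slo, {"response_time_p95_ms": 700, "error_rate_pct": 0.5}))
```

The first call meets both objectives, the second one only reaches the warning band for response time, so the overall score drops.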

DEMO AND ENVIRONMENT

In the demo, we use a simple CNCF application called PodTato Head, which is deployed into the chaos stage using a Helm chart. In this chaos stage, Keptn triggers the tests for us. First, it triggers JMeter, because we have to simulate real-world traffic, so JMeter tests are executed. Simultaneously, Keptn triggers the Litmus integration, which kicks off the Litmus chaos tests. So two different tests run at the same time. After execution, Keptn triggers the quality gate, which fetches the relevant metrics from Prometheus and evaluates them. If the score is good enough, the resilience measured against the SLOs is satisfactory; otherwise, it is not up to the mark.
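The shape of this chaos stage (load test and chaos test running side by side, followed by a quality gate) can be sketched as follows. This is not Keptn code: `run_chaos_stage` and the three callables passed to it are hypothetical placeholders for the JMeter run, the Litmus experiment, and the SLO evaluation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_chaos_stage(run_load_test: Callable[[], None],
                    run_chaos_test: Callable[[], None],
                    quality_gate: Callable[[], bool]) -> bool:
    """Run load and chaos tests concurrently, then evaluate the gate."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        load = pool.submit(run_load_test)
        chaos = pool.submit(run_chaos_test)
        # result() blocks until the task is done and re-raises its errors,
        # so the gate only runs after both tests have finished.
        load.result()
        chaos.result()
    return quality_gate()


if __name__ == "__main__":
    promoted = run_chaos_stage(
        run_load_test=lambda: print("simulating traffic"),
        run_chaos_test=lambda: print("injecting fault"),
        quality_gate=lambda: True,  # placeholder for the SLO evaluation
    )
    print("promote to next stage" if promoted else "stop the delivery")
```

Running the two tests in parallel matters here: the chaos experiment is only meaningful while the application is under realistic load, and the gate should only look at metrics once both have completed.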

SUMMARY

Jürgen concludes his talk with a summary: "Today, we learned about defining Service-Level Objectives for resilience, integrating resilience evaluation into CI/CD pipelines, and enabling continuous evaluation of resilience based on SLOs."
