Julie Gunderson actively works to further the adoption of DevOps best practices and methodologies at PagerDuty. She has been actively involved in the DevOps space for over five years and is passionate about helping individuals, teams and organizations understand how to leverage DevOps & develop amazing cultures. Julie made a career developing relationships and building communities. She has delivered talks at conferences such as Velocity, Agile Conf, OSCON, and more.

The Psychology of Chaos Engineering

About the speaker

Julie Gunderson

DevOps Advocate, PagerDuty

About the talk

We understand why chaos engineering works from a technical perspective, but what about the humans?

Chaos Engineering, failure injection, and similar practices have verified benefits for the resilience of systems and infrastructure. But can they provide similar resilience to teams and people? What are the effects and impacts on the humans involved in the systems? This talk will delve into both positive and negative outcomes for all the groups of people involved, including users, engineers, product, and business owners.

Using case studies from organizations where chaos engineering has been implemented, we will explore the changes in attitude that these practices create. This talk will include a brief overview of chaos engineering practices for unfamiliar members of the audience, but the main focus will be on human elements. I will discuss successful implementations, as well as challenges faced in teams where chaos was a 'success' from a technical perspective but had a negative impact on the people involved.

After seeing this talk, attendees will have a better understanding of the human factors involved in chaos engineering, know good practices to care for the people and teams working with chaos, and be even more excited about this practice.

Transcript

"Chaos Engineering is less about the technicalities of what you should be doing and more about what you should be thinking while performing a chaos experiment." This is how Julie Gunderson started her talk on 'The Psychology of Chaos Engineering' at Chaos Carnival 2021.

Julie defines Chaos Engineering as the discipline of experimenting on a system to build confidence in the system's capability to withstand turbulent conditions in production.

"Netflix was the first entity to start the practice of Chaos Engineering through a tool called Chaos Monkey. Chaos Monkey was designed to shut off services in production, which created the need for defensive coding. Chaos Engineering has since evolved into much more than just shutting things off, and has become about building a culture of resilience in the presence of unexpected system outcomes," Julie adds.

Resilience Engineering is about identifying and then enhancing the capabilities of people and organizations that allow them to adapt effectively and safely under various conditions.

"You can think of Chaos Engineering in terms of gamedays; it's all about experimentation. We create a hypothesis stating that we believe a certain thing is going to happen to our system, which will make it more reliable. Having a hypothesis is important because it lets us tally the results and understand what we have learned at the end of the day. Chaos Engineering makes effective and efficient organizations a fertile environment for learning," she explains.

At PagerDuty, incidents are treated as a gift. We as humans cannot interact with our systems directly; we do it through a proxy, and incidents are the systems' way of talking to us. With Chaos Engineering we don't have to wait for an incident to take place: by creating a hypothesis and testing it, we encourage our systems to tell us things about themselves so that we can learn more.

Some people are unsure when to introduce chaos testing into their systems: should it be in production or not? Technically speaking, not all systems are suited to chaos testing, as it comes with risks. Aiming for 100% resiliency after your chaos testing is a fool's errand, so 99.9% or 99.99% is a more realistic target. Remember that behind that remaining 0.01% there is a human. The more control and understanding we have, the more resilient our systems and our teams will become.
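To make those targets concrete, here is a small arithmetic sketch of how much downtime per year each one allows. The function name and the 365-day year are illustrative assumptions, not something taken from the talk.

```python
# Illustrative arithmetic only: yearly downtime allowed by an availability target.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for target in (99.9, 99.99):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% availability allows about {minutes:.1f} minutes of downtime per year")
```

Under these assumptions, 99.9% leaves a budget of roughly 8.8 hours a year, while 99.99% leaves a little under an hour.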

When it comes to observability during experiments, we have to understand the impact we will create and know when to pull back on an experiment, work around it, and gain more knowledge about our systems and user experience. For this, we need to measure things such as the impact of downtime and keep the blast radius to a minimum. Canaries are a great technique for running chaos experiments, and for production testing you need a well-understood blast radius.

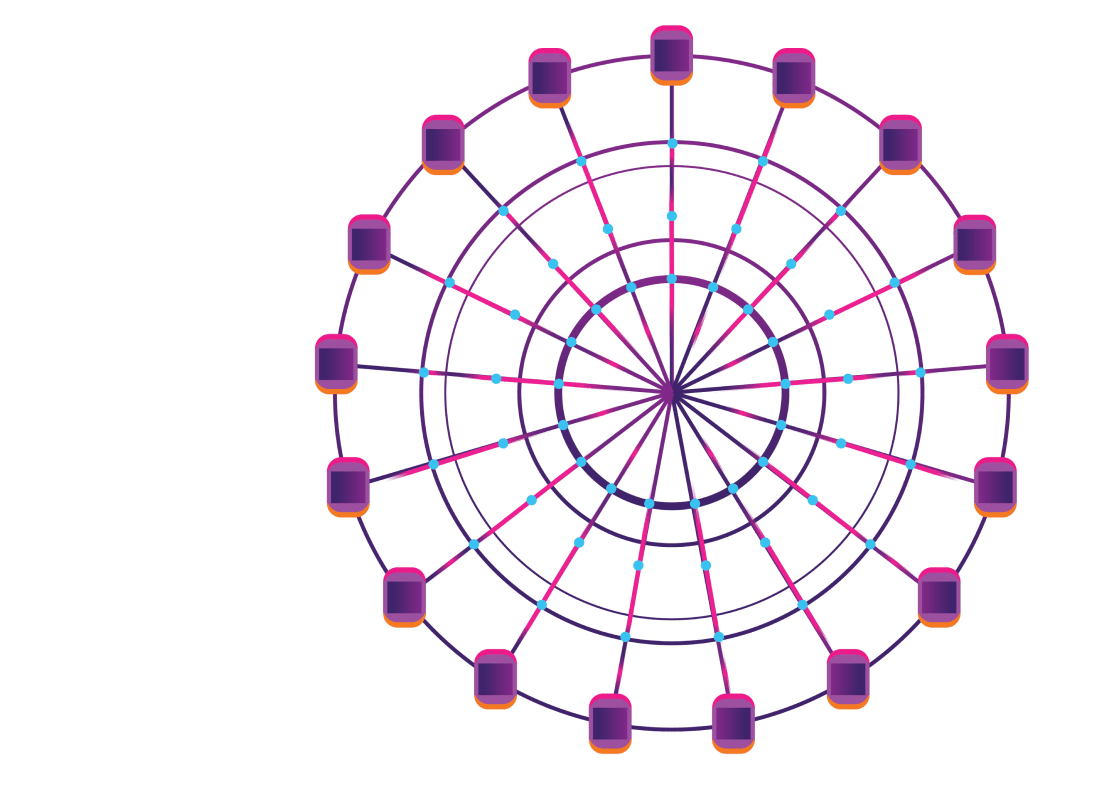

For example, Microsoft releases its software in rings, like concentric circles: a release goes first to the people who worked on the product, then to other teams inside Microsoft, then to the whole company, and eventually to the general public. They learn more and more as they expand the blast radius, and we can do the same with our chaos experiments.
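The ring idea can be sketched as a loop that widens the blast radius one step at a time and pulls back as soon as the observed impact is too high. This is a minimal sketch: the ring names, traffic fractions, abort threshold, and the `measure_error_rate` callback are all hypothetical stand-ins for whatever rollout stages and observability a real team has.

```python
from typing import Callable, List, Tuple

# Hypothetical rings, smallest blast radius first: (name, fraction of traffic exposed).
RINGS: List[Tuple[str, float]] = [
    ("owning team", 0.01),
    ("other internal teams", 0.05),
    ("whole company", 0.25),
    ("general public", 1.0),
]


def run_in_rings(measure_error_rate: Callable[[float], float],
                 abort_threshold: float) -> List[str]:
    """Widen the experiment ring by ring; stop once the error rate exceeds the threshold.

    measure_error_rate stands in for real monitoring: it receives the exposed
    traffic fraction and returns the error rate observed for that ring.
    """
    completed: List[str] = []
    for name, fraction in RINGS:
        error_rate = measure_error_rate(fraction)
        if error_rate > abort_threshold:
            print(f"Aborting at ring '{name}': error rate {error_rate:.2%}")
            break
        completed.append(name)
    return completed


# Usage with a fake measurement in which errors grow with exposure.
completed = run_in_rings(lambda fraction: 0.02 * fraction, abort_threshold=0.004)
print("Completed rings:", completed)
```

With the fake measurement above, the experiment completes the first two rings and aborts before reaching the whole company.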

DESIGNING THOUGHTFUL EXPERIMENTS:

- Document the expected behavior after the hypothesis is made.

- Identify the possible or potential failures.

- Go through the documentation afterwards to establish what the observed behavior was.

- Pick a time when the stakes are lower and the impact is minimal. For example, if you are a retailer, avoid testing on Black Friday or during a sale season.

- Keep an audit trail of events.

- Review the notification and response process.
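The checklist above could be captured in a small record that travels with each experiment. The sketch below is only one possible shape; the `ChaosExperiment` class, its field names, and the sample scenario are hypothetical and do not come from the talk or from any PagerDuty tooling.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple


@dataclass
class ChaosExperiment:
    """Hypothetical record of one experiment: hypothesis, expectations, and audit trail."""
    hypothesis: str
    expected_behavior: str
    potential_failures: List[str]
    observed_behavior: str = ""
    audit_trail: List[Tuple[datetime, str]] = field(default_factory=list)

    def log(self, event: str) -> None:
        """Append a timestamped event so the run can be reviewed afterwards."""
        self.audit_trail.append((datetime.now(timezone.utc), event))

    def matches_hypothesis(self) -> bool:
        """Compare what was observed with what was documented up front."""
        return self.observed_behavior == self.expected_behavior


# Usage with an invented scenario.
experiment = ChaosExperiment(
    hypothesis="Losing one cache node does not affect checkout",
    expected_behavior="requests served from remaining nodes",
    potential_failures=["cache stampede", "elevated latency"],
)
experiment.log("cache node stopped")
experiment.observed_behavior = "requests served from remaining nodes"
experiment.log("experiment ended")
print(experiment.matches_hypothesis(), len(experiment.audit_trail))
```

Writing the expected behavior down before the run is what makes the later comparison meaningful, and the timestamped trail gives the review of the notification and response process something concrete to work from.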

FAILURE FRIDAYS AT PAGERDUTY:

Failure Fridays are a form of Chaos Engineering, not just an alliteration: they uncover implementation issues that reduce resilience. They are designed to proactively discover deficiencies so that those deficiencies do not become the root cause of, or a contributing factor to, a future outage. We also see them as an opportunity to build a stronger team culture by coming together as a team. We share knowledge across departments so that, at the end of the day, ops knows how the development team debugs production issues in their systems and vice versa. Failures will occur; they are part of the learning process. Failures are no longer a freak occurrence that can be ignored or explained away. All the code that the engineering teams write is now tested against how it will survive a Failure Friday.

"We injected our first Failure Friday in 2013 and we have learned a lot over these years. Injecting failure and continuously improving our infrastructure has not only helped us deliver better software but also build internal trust and empathy. Stress testing our systems and processes helps us understand how to improve our operations," Julie explains.

DON'T FORGET THE PEOPLE

It has been shown that following these techniques helps us understand our systems better. It helps us build more reliable and stable systems, which in turn helps us build resiliency in our organization. But people play an important role in making all of this happen.

"One of my favorite quotes about Chaos Engineering is from Bruce Wong of Stitch Fix: 'Saying you are getting your systems ready for Chaos Engineering is like saying you are getting in shape to go to the gym.' You need to design your experiments and have a hypothesis, but you also need to think about the organizational culture," Julie adds.

Corporate culture plays a crucial role in Chaos Engineering. One way to sustain that culture is to pay attention to behavior. Incentives can help change behavior so that people start actively participating in and embracing Chaos Engineering. That's why we talk about learning, improving, and building our systems in a way that can withstand failure. Additionally, you should look at Chaos Engineering as a way to improve your incident management and incident response process, because it's a great way to make sure that you have things set up correctly. It is also an opportunity to fine-tune your alerting and monitoring. Failed chaos experiments can be valuable too: you learn the ways in which a system DOES NOT work, and you create a healthy learning environment for your peers in the process.

THINGS TO THINK THROUGH

- Know your conditions: set a timeline for when you start and end your experiments.

- Chaos experiments shouldn't become a source of stress or a burden for teams; rather, they should create a safer environment in which to make mistakes the first few times.

- There are humans behind those numbers, so be as transparent as possible and recognize notable achievements.

While concluding her talk, Julie mentions, "The key concepts that I would like you to take from here are building a hypothesis around steady behavior, varying real-world events, running experiments in production, automating experiments to run continuously, minimizing the blast radius, and taking care of the people during the whole process."
