Chinmay is a Technical Evangelist at Epsagon with experience in data center and cloud technologies. Previously, he worked at companies such as Intel, IBM, and early-stage startups. Chinmay has a Master's Degree from Columbia University and an MBA from UC Berkeley.

Achieving Reliability in a Microservices-Based Environment using Observability

About the speaker

Chinmay Gaikwad

Technical Evangelist,

Epsagon

About the talk

The evolution of architecture from monolithic to microservices-based has enabled organizations to meet the ever-evolving needs of their customers. The need for getting insights into these microservices has become critical for developers and operations teams alike. However, achieving reliability in microservices-based environments is not trivial. In this session, we will explore how observability plays a critical role in the microservices world and in Chaos Engineering. We will deep dive into distributed tracing to achieve full observability and monitoring for production environments. Finally, we will discuss the checklist that every DevOps person should look into for incorporating observability into their environment.

Transcript

Achieving reliability in Microservices

For yet another enlightening session on day 1 of Chaos Carnival 2021, Chinmay Gaikwad talks about achieving reliability in a microservices-based environment using observability.

Chinmay starts his session by saying, "Today we will be talking about microservices and the challenges we face in today's scenarios, reliability in distributed environments, and building an observability strategy."

MICROSERVICES: THE NEW NORMAL AND NEW CHALLENGES

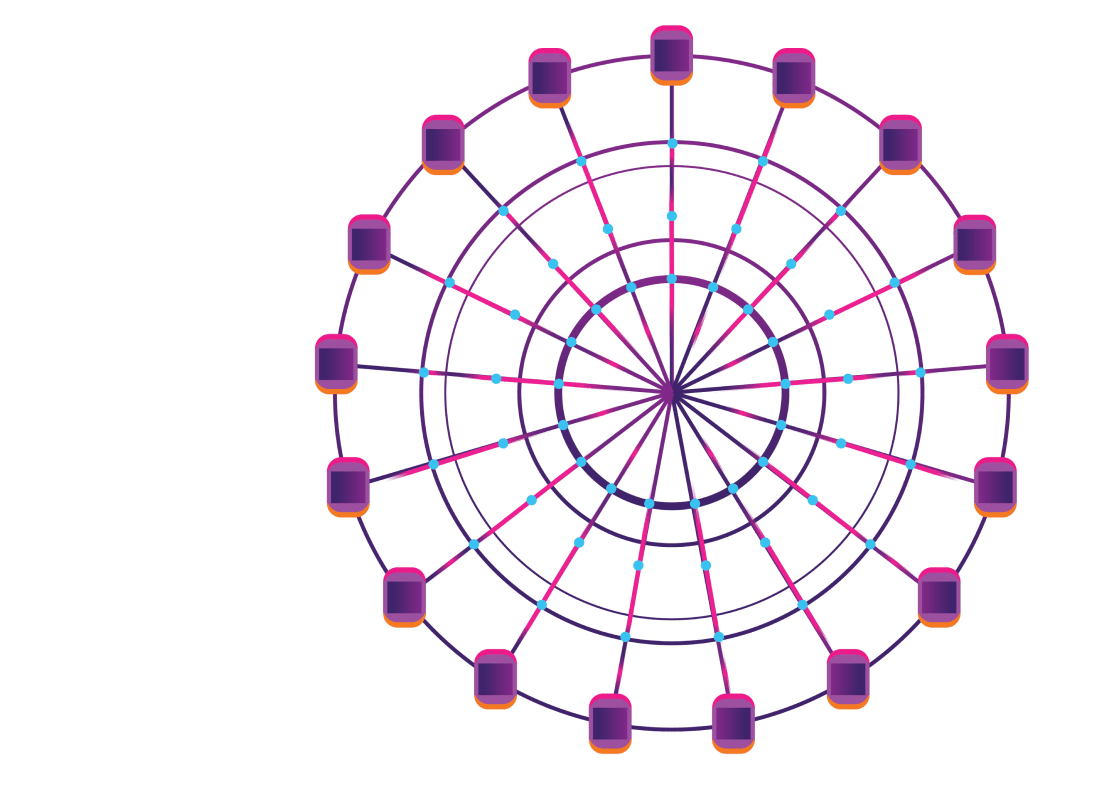

With the evolution of software, there has been a massive shift in architecture. Over 85% of enterprises are adopting microservices as their default architecture. More and more applications are built in the cloud rather than in on-premises data centers, and they are highly distributed, broken down into smaller, lightweight services that can almost be thought of as mini-applications. Each one runs independently of the others, and there is no monolithic bundle of code.

Microservices architecture has great benefits, but the once easy-to-depict architecture diagrams have become more complex and have brought a unique set of challenges.

Let's see why enterprises moved to microservices in the first place. According to Epsagon's 2020 report, the main reasons are scalability, faster development, and decreased system administration. Microservices speed up development and allow much more efficient utilization of resources.

As we saw, modern applications can now have thousands of services along with databases, caches, and more, so achieving reliability using only traditional monitoring solutions has become increasingly complex. It can be nearly impossible to know what's going on under the hood. So, let's see how we can achieve reliability in such distributed applications.

Observability allows us to ask the why and how of a system's functioning. It essentially involves instrumenting systems and applications to collect relevant data; thus, observability and monitoring are complementary to each other.

"In my opinion, observability is not just a tool; it must be a culture in any organization. It is important for everyone, right from the C-suite to developers," Chinmay adds.

The three pillars of observability are very well known:

- Metrics: Metrics are a great way for operators to figure out whether something has gone wrong, including during chaos engineering. The four golden signals to watch during a chaos test are latency, traffic, errors, and saturation.

- Logs: Logs, on the other hand, tell us why something went wrong, but when you have hundreds or even thousands of microservices, logs alone are not enough.

- Traces: A single trace shows the activity for an individual transaction or request as it flows through an application. Traces are a critical part of observability, as they provide context for other telemetry.
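To make the four golden signals concrete, here is a minimal Python sketch that summarizes them for one time window. The `Request` record, the `golden_signals` function, the choice of the 95th percentile, and the use of CPU as the saturation measure are all assumptions made for illustration; they are not taken from the talk or from any particular monitoring product.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """One observed request (hypothetical record shape)."""
    duration_ms: float
    status: int  # HTTP status code


def percentile(sorted_values, p):
    """Nearest-rank percentile for an integer p in 1..100."""
    rank = (p * len(sorted_values) + 99) // 100  # integer ceil(p * n / 100)
    return sorted_values[rank - 1]


def golden_signals(requests, window_s, cpu_used, cpu_capacity):
    """Summarize latency, traffic, errors, and saturation for one window."""
    durations = sorted(r.duration_ms for r in requests)
    server_errors = sum(1 for r in requests if r.status >= 500)
    return {
        "latency_p95_ms": percentile(durations, 95) if durations else 0.0,
        "traffic_rps": len(requests) / window_s,
        "error_rate": server_errors / len(requests) if requests else 0.0,
        # Saturation is approximated here as the fraction of CPU in use.
        "saturation": cpu_used / cpu_capacity,
    }


if __name__ == "__main__":
    sample = [Request(100.0, 200)] * 8 + [Request(900.0, 500), Request(1200.0, 500)]
    print(golden_signals(sample, window_s=10, cpu_used=3.0, cpu_capacity=4.0))
```

Comparing such a summary before, during, and after a chaos experiment is one simple way to see whether the experiment moved any of the four signals.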

Chaos Engineering exists so that we don't have to introduce chaos in our production environments. Chaos experiments with a good observability solution result in higher customer and employee satisfaction as well as an increase in focus on innovation instead of fighting unnecessary fights.

Chinmay explains, "Let's consider a simple example of a virtual shop for this session. Now, you want to modernize your application by containerizing it. The HTTP server authenticates requests using Auth0 and pushes them to a Kafka stream; a Java container pulls from the stream and updates the DynamoDB table. Let's say you observe that orders were sent but not handled. Where would you start? Maybe with traditional APM tools. But traditional monitoring solutions come at the expense of higher resource utilization because of heavyweight and multiple agents, and they can collect only host metrics or are purely metrics-driven, so they don't help pinpoint the problem. The very nature of microservices means that this is likely to leave gaps in your observability strategy."

THINGS MISSING

Correlation helps us find the exact problem across metrics, logs, and services. We can correlate these pieces of data by using distributed tracing. Many vendors offer some form of distributed tracing, and even service meshes are now building support for it. There are dozens of open-source solutions. Distributed tracing was born at Google over a decade ago, and it allowed engineers to trace the specific path a request takes through services. In effect, it helps shine a light on the needle in the haystack that logging and metrics can miss.
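As a rough illustration of correlation, the Python sketch below groups spans and log lines by a shared trace ID and then looks for orders that were published to the stream but never written to the database, as in the virtual shop scenario. The record shapes and the operation names `kafka.publish` and `dynamodb.update` are hypothetical and chosen only for this example; a real tracing backend would supply its own schema.

```python
from collections import defaultdict


def group_by_trace(spans, logs):
    """Collect spans and log records that share the same trace ID."""
    traces = defaultdict(lambda: {"spans": [], "logs": []})
    for span in spans:
        traces[span["trace_id"]]["spans"].append(span)
    for record in logs:
        traces[record["trace_id"]]["logs"].append(record)
    return dict(traces)


def unhandled_orders(traces, sent_op="kafka.publish", handled_op="dynamodb.update"):
    """Return error messages for traces that were sent but never handled."""
    result = {}
    for trace_id, trace in traces.items():
        operations = {span["operation"] for span in trace["spans"]}
        if sent_op in operations and handled_op not in operations:
            result[trace_id] = [
                record["message"]
                for record in trace["logs"]
                if record["level"] == "ERROR"
            ]
    return result


if __name__ == "__main__":
    spans = [
        {"trace_id": "t1", "service": "http-server", "operation": "kafka.publish"},
        {"trace_id": "t1", "service": "order-consumer", "operation": "dynamodb.update"},
        {"trace_id": "t2", "service": "http-server", "operation": "kafka.publish"},
    ]
    logs = [
        {"trace_id": "t2", "level": "ERROR", "message": "failed to deserialize order"},
        {"trace_id": "t1", "level": "INFO", "message": "order stored"},
    ]
    print(unhandled_orders(group_by_trace(spans, logs)))
```

Because every record carries the trace ID, the error log for the broken order is found without searching the logs of each service separately.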

OPEN TELEMETRY FRAMEWORK, OPEN-SOURCE TOOLING FOR DISTRIBUTED TRACING:

- OpenTelemetry framework

- Jaeger, Zipkin

- Manual tracing requires heavy lifting: Instrumentation and Maintenance

- Lack visualizations, context, and tracing through middleware.

GENERATING TRACES WITH OPENTELEMETRY

- Instrument every call (AWS-SDK, HTTP, Postgres, Spring, Flask, Request, etc.)

- Create a span for every request and response.

- Add context to every span.

- Inject and extract IDs in relevant calls.
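The steps above can be sketched in plain Python to show what an instrumentation library does on our behalf. This is a hand-rolled illustration, not the OpenTelemetry API: `start_span`, `inject`, `extract`, and the `FINISHED` list are hypothetical names, and the only real-world detail borrowed is the layout of the W3C Trace Context `traceparent` header (version, 32-hex trace ID, 16-hex parent span ID, flags).

```python
import secrets
import time
from contextlib import contextmanager

FINISHED = []  # stand-in for an exporter: completed spans are collected here


@contextmanager
def start_span(name, parent=None, **attributes):
    """Create a span for one operation and record it when the block exits."""
    span = {
        "name": name,
        "trace_id": parent["trace_id"] if parent else secrets.token_hex(16),
        "span_id": secrets.token_hex(8),
        "parent_id": parent["span_id"] if parent else None,
        "attributes": dict(attributes),  # context added to the span
        "start": time.time(),
    }
    try:
        yield span
    finally:
        span["end"] = time.time()
        FINISHED.append(span)


def inject(span, headers):
    """Write the trace and span IDs into outgoing headers."""
    headers["traceparent"] = f"00-{span['trace_id']}-{span['span_id']}-01"


def extract(headers):
    """Read the caller's IDs from incoming headers, or return None."""
    parts = headers.get("traceparent", "").split("-")
    if len(parts) != 4:
        return None
    _version, trace_id, span_id, _flags = parts
    return {"trace_id": trace_id, "span_id": span_id}


if __name__ == "__main__":
    message_headers = {}
    # Producer side: span around the publish call, IDs injected into the message.
    with start_span("publish order", service="http-server") as producer_span:
        inject(producer_span, message_headers)
    # Consumer side: IDs extracted, so the new span joins the same trace.
    with start_span("handle order", parent=extract(message_headers),
                    service="order-consumer") as consumer_span:
        pass
    print(consumer_span["trace_id"] == producer_span["trace_id"])
```

In practice, auto-instrumentation packages wrap calls such as HTTP clients and database drivers so that spans and header propagation do not have to be written by hand as they are in this sketch.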

BEST PRACTICES FOR OBSERVABILITY

- Automated setup and minimum maintenance (lightweight agent).

- Support any environment (containers, Kubernetes, serverless, cloud).

- Connects every request in a transaction and helps us see the bottlenecks.

- Search and analyze your data and provide context to alert.

- Helps to quickly pinpoint problems by isolating microservices responsible for errors.

BENEFITS OF DISTRIBUTED TRACING:

- Visualize and Understand: A typical architecture involves several microservices, and one of the most important features of an observability solution is visualization. Users should expect these complex architectures to be rendered as service maps with actionable data. As we zoom in or filter on specific components, we should be able to see the latency between those components as well as the areas where thresholds have been crossed. Metrics should also be present to provide context for issues or for alerts that are firing. Service maps are also helpful for understanding the impact of your chaos experiments.

- Bring focus to the problem: A distributed tracing solution should narrow the visualization down to the scope of the services involved. This helps in determining the impact of an outage or an experiment, or in finding where latency has been seen. Without smart filtering capabilities, an architecture map becomes nothing more than an exercise.
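To illustrate how a service map with latency and threshold filtering could be derived from trace data, here is a minimal Python sketch. The span fields (`service`, `span_id`, `parent_id`, `duration_ms`) and the functions `service_map` and `slow_edges` are assumptions made for this example and do not describe how any particular product builds its maps.

```python
from collections import defaultdict


def service_map(spans):
    """Build caller -> callee edges with the average callee span duration."""
    by_id = {span["span_id"]: span for span in spans}
    durations = defaultdict(list)
    for span in spans:
        parent = by_id.get(span.get("parent_id"))
        # Only calls that cross a service boundary become edges on the map.
        if parent and parent["service"] != span["service"]:
            durations[(parent["service"], span["service"])].append(span["duration_ms"])
    return {edge: sum(values) / len(values) for edge, values in durations.items()}


def slow_edges(edge_latency, threshold_ms):
    """Keep only the edges whose average latency crosses the threshold."""
    return {edge: ms for edge, ms in edge_latency.items() if ms > threshold_ms}


if __name__ == "__main__":
    spans = [
        {"span_id": "a", "parent_id": None, "service": "http-server", "duration_ms": 600},
        {"span_id": "b", "parent_id": "a", "service": "auth", "duration_ms": 40},
        {"span_id": "c", "parent_id": "a", "service": "kafka", "duration_ms": 20},
        {"span_id": "d", "parent_id": "c", "service": "order-consumer", "duration_ms": 300},
        {"span_id": "e", "parent_id": "c", "service": "order-consumer", "duration_ms": 500},
    ]
    edges = service_map(spans)
    print(slow_edges(edges, threshold_ms=100))
```

Filtering the map down to the edges above a threshold is the kind of narrowing that keeps attention on the few services affected by an outage or a chaos experiment.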

"The journey to observability lies in identifying your business goals and architecture model, determining the approach during the beginning of a chaos experiment, implementing observability solutions, and ensuring the scalability of observability strategy," Chinmay adds.

While concluding his talk, Chinmay states, "Today we have learned that distributed applications bring unique benefits and challenges, that there are several advantages to using a distributed tracing approach, and that observability is critical to keep track of the architecture, detect performance bottlenecks, reduce MTTR, and reduce chaos engineering efforts. Finally, I would like to say, be proactive rather than reactive!"
